⚡ZurzAI.com⚡

Companies Similar to RaiderChip

Graphcore

Graphcore technology accelerates AI innovation, enabling faster experimentation and breakthroughs that enhance human potential for healthier, fairer, and more sustainable living.

Graphcore is focused on advancing the field of artificial intelligence (AI) through its innovative computing technology. Central to its operations is the development and deployment of Intelligence Processing Units (IPUs), which are designed specifically to enhance machine intelligence. By targeting industries such as finance, healthcare, scientific research, telecommunications, consumer internet, and academia, Graphcore aims to redefine how industries leverage AI for innovation, efficiency, and enhanced outcomes.

Key Focus Area: Graphcore's key focus is on providing highly specialized processing power for AI workloads. Its primary product, the IPU, is designed to accelerate machine intelligence tasks that require complex computations and large data sets. This includes applications in natural language processing, computer vision, and graph neural networks across various sectors.

Unique Value Proposition and Strategic Advantage:

- Wafer-on-Wafer Technology: Graphcore’s Bow IPU uses Wafer-on-Wafer (WoW) technology to increase computational power and efficiency. This stacking technique enables each IPU to reach up to 350 teraFLOPS of AI compute, outperforming the previous IPU generation.

- Massive Parallel Processing: IPUs are structured for fine-grained, high-performance computing, with architecture optimized for AI workloads that operate in parallel. This architecture supports scalable, efficient processing needed by contemporary AI applications.

- Integration with Popular AI Frameworks: Graphcore’s Poplar software stack integrates with widely used machine learning frameworks such as TensorFlow and PyTorch, simplifying adoption and deployment.

Delivery on Value Proposition: Graphcore delivers on its value proposition through a series of meticulously developed products and services:

- Hardware Solutions: Graphcore provides scalable IPU solutions like the Bow Pod series, which ranges from small-scale exploratory systems (Bow Pod16) to large-scale deployment configurations (Bow Pod256). These systems cater to diverse computational needs from development to full-scale production.

- Cloud Connectivity: Graphcore offers cloud-based solutions, enabling businesses to access IPU technology without on-premises installations. This allows easy scalability and access to the latest AI capabilities.

- Cross-Industry Applications: By targeting key industries, Graphcore customizes its IPU offerings for specialized applications such as fraud detection in finance, drug discovery in healthcare, and even particle physics simulations in research. Each application is optimized to utilize the parallel processing power of IPUs effectively.

- Collaborative Innovations and Research Partnerships: Graphcore collaborates with major tech companies and academic institutions, facilitating the integration of its IPUs into cutting-edge projects and research initiatives that drive AI forward.
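To give a sense of scale for the Bow Pod range, the short sketch below multiplies the per-IPU peak figure quoted above (up to 350 teraFLOPS) by an IPU count. It assumes that the number in each product name (16, 256) is the number of Bow IPUs in the system, and it ignores real-world utilization, so the results are theoretical peaks rather than measured throughput. The function name `pod_peak_pflops` is hypothetical and exists only for this illustration.

```python
PEAK_TFLOPS_PER_IPU = 350  # "up to" figure quoted for one Bow IPU


def pod_peak_pflops(num_ipus, tflops_per_ipu=PEAK_TFLOPS_PER_IPU):
    """Return the theoretical peak of a pod in petaFLOPS (1 PFLOPS = 1000 TFLOPS)."""
    if num_ipus <= 0:
        raise ValueError("num_ipus must be positive")
    return num_ipus * tflops_per_ipu / 1000


# Assumption: the product name encodes the number of IPUs in the system.
pod_sizes = {"Bow Pod16": 16, "Bow Pod256": 256}
peaks = {name: pod_peak_pflops(n) for name, n in pod_sizes.items()}

for name, peak in peaks.items():
    print(f"{name}: about {peak:.1f} petaFLOPS theoretical peak")
```

Under these assumptions, the gap between the smallest and largest configurations is a factor of 16 in peak compute, which is the range the series is meant to cover from exploration to production.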

In summary, Graphcore's strategy hinges on its specialized IPU technology, which provides scalable, efficient, and robust solutions for complex AI challenges. Its innovation in processing technology and its strategic partnerships position it to meet evolving AI demands across various global industries.

LeapMind

LeapMind researches original chip architectures for implementing neural networks on low-power circuits.

LeapMind Inc., a company focused on AI technology and solutions, has announced its dissolution effective July 31, 2024. This decision is attributed to various unspecified circumstances, despite efforts in developing AI solutions such as the quantization machine learning model development environment "Blueoil," ultra-energy-efficient AI accelerators, and pre-trained models under the brand name "Efficiera." The company also engaged in the development of large-scale learning chips, such as "Octra," designed for next-generation large language models (LLMs).

Key Developments and Products:

Efficiera Technology:

- Efficiera IP: This ultra-low-power AI accelerator IP is described as achieving leading power, performance, and area efficiency. It is intended to make AI model deployment on edge devices practical, with a stated goal of 107.8 TOPS/W (tera operations per second per watt).

- Efficiera Models: The development of models focused on extreme quantization, reducing bit representation down to 1-bit, which allows AI models to function effectively on edge devices with constrained computing resources and power.

- Efficiera SDK: A comprehensive AI software suite that includes tools for compiling AI models and deploying applications in production environments on edge devices. The SDK provides libraries and tools for extreme quantization and efficient AI model execution.

AI Solutions and Applications:

- Image Processing Applications:

- High-accuracy noise reduction and image enhancement, applicable to several domains such as smartphone cameras and industrial imaging.

- Techniques like image deblurring and super-resolution, enabling clearer image and video quality, particularly in challenging conditions.

- Image Recognition Applications:

- Object detection models capable of high-speed inference on edge devices, supportive of various sectors including surveillance and transportation.

- Segmentation and pose estimation technologies enabling detailed analysis and categorization of images in applications such as autonomous driving and industrial inspection.

- Anomaly detection models that identify irregularities from learned normal patterns, aiding in quality control and safety monitoring.

Additional AI Technologies:

- The company advanced its offerings with application-specific performance tuning, allowing the extraction of maximum efficiency and speed from models deployed on minimal hardware, such as FPGAs.

- LeapMind's technology aims to integrate deep learning capabilities into compact devices across diverse industries, from consumer electronics to heavy machinery.
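As a rough illustration of the extreme quantization mentioned under Efficiera Models, the sketch below reduces each weight to a single bit (its sign) and keeps one shared floating-point scale. This is a generic sign-plus-scale scheme chosen for clarity, not LeapMind's actual method; the function names `binarize` and `binary_dot` and the sample values are hypothetical.

```python
def binarize(weights):
    """Quantize weights to 1 bit each: keep only the sign, plus one shared scale."""
    if not weights:
        raise ValueError("weights must be non-empty")
    # Shared scale: mean absolute value of the original weights.
    scale = sum(abs(w) for w in weights) / len(weights)
    # One bit per weight: +1 for non-negative values, -1 for negative values.
    signs = [1 if w >= 0 else -1 for w in weights]
    return signs, scale


def binary_dot(signs, scale, activations):
    """Approximate dot(weights, activations) using only the signs and the scale."""
    if len(signs) != len(activations):
        raise ValueError("signs and activations must have the same length")
    # With +/-1 weights, each multiply reduces to an add or a subtract.
    acc = sum(a if s > 0 else -a for s, a in zip(signs, activations))
    return scale * acc


weights = [0.4, -0.6, 0.5, -0.5]      # illustrative full-precision weights
activations = [1.0, 2.0, 3.0, 4.0]    # illustrative inputs

signs, scale = binarize(weights)
approx = binary_dot(signs, scale, activations)
exact = sum(w * a for w, a in zip(weights, activations))

print("signs:", signs, "scale:", scale)
print("1-bit result:", approx, "full-precision result:", exact)
```

Storing one bit per weight instead of a 32-bit float cuts weight storage by roughly 32x, and replacing multiplies with adds and subtracts is what makes this style of model attractive on power-constrained edge hardware. The price is approximation error, which is why models generally need to be trained with the quantization in mind.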

LeapMind has communicated that any customer data held as part of past contracts will be securely disposed of following ethical and legal standards. The company's efforts to address contemporary challenges such as high semiconductor costs and performance stagnation through its innovative AI solutions were integral to its mission of making AI accessible and user-friendly for widespread implementation. Despite its closure, LeapMind’s advancements in AI technology have contributed to potential efficiency improvements and increased AI capabilities at the edge.

Mythic

Mythic focuses on AI hardware for edge computing, enabling high-performance AI inference in low-power devices.

Key Focus Area: Mythic concentrates on power-efficient AI acceleration, particularly at the edge but also on cloud platforms. Its emphasis is on high-performance AI inference using specialized analog computing technology, aimed at applications held back by the high power consumption and computational inefficiency commonly associated with digital processors. Its products serve diverse markets such as intelligent machines, smart homes, AR/VR, drones, aerospace, and smarter cities.

Unique Value Proposition and Strategic Advantage:

- Analog Compute-in-Memory Technology: Mythic’s proprietary analog compute-in-memory architecture is at the core of their competitive advantage. Unlike traditional digital computing, their solutions store AI parameters within the processor, doing away with memory bottlenecks, thereby reducing energy consumption and enhancing speed.

- Power and Cost Efficiency: Their analog processors are said to deliver computing capability comparable to a GPU at as little as one-tenth of the power consumption and at much lower cost. This is positioned as a strategic advantage over digital counterparts, which face operational limits due to energy requirements and costs.

- Scalability and Flexibility: The design of the Mythic AMP allows deployments from small edge devices to data centers, offering flexibility and future-proofing for developers as demands for performance may increase.

Delivery on Value Proposition:

- Product Offerings: Mythic’s key products, such as the M1076 Analog Matrix Processor, MM1076 M.2 M Key Card, and ME1076 M.2 A+E Key Card, integrate their analog computing technology into various applications that demand AI inference with low power consumption and high performance.

- Software Ecosystem Integration: They ensure compatibility with existing AI training frameworks like PyTorch and TensorFlow, simplifying integration with existing AI workflows. Their software stack optimizes and compiles neural networks, enhancing ease of adoption and performance.

- Market and Application Focus: Mythic targets diverse sectors including smarter cities for surveillance, intelligent machines for manufacturing efficiency, AR/VR environments for interactive experiences, and drones for secure data processing. By focusing on how their technology can be integrated seamlessly into these applications, they address industry-specific challenges such as latency reduction, cost efficiency, and system resilience.

Overall, Mythic positions its technology as a game changer for AI infrastructure, providing an efficient alternative to the incumbent digital AI processing methods, with a particular focus on reducing energy consumption while maintaining high computational performance.

Habana

A leader in chip and semiconductor technology. A company that provides deep learning acceleration hardware and software that helps with high-performance computing and data center workloads.

Habana Labs, owned by Intel, primarily focuses on developing advanced AI accelerators, notably the Intel® Gaudi® series. These products are dedicated to enhancing performance and efficiency in AI computing, particularly for deep learning and generative AI applications.

Key Focus Area:

- The company's main focus lies in high-performance, cost-efficient AI accelerators tailored for training and inference of deep learning models. Their products are significant for industries including autonomous vehicles, healthcare, fintech, and retail that rely heavily on AI functionalities.

Unique Value Proposition and Strategic Advantage:

- Price-Performance Efficiency: Habana's Gaudi accelerators claim a significant edge in price-performance metrics, offering up to 40% better price-to-performance ratios than comparable Nvidia GPU solutions in some configurations.

- Scalability: The Intel Gaudi architecture integrates 100 GbE ports on every processor, enabling flexible and cost-effective scale-out. This is uncommon among AI chips and is aimed at reducing total cost of ownership.

- Choice in AI Processing: By offering customizable hardware options such as air-cooled and liquid-cooled versions, PCIe cards, and integrated server systems, Habana enables enterprises to choose configurations that suit their needs in terms of performance, scale, and power usage.

Delivery on Value Proposition:

- Hardware and Software Integration: Habana offers a comprehensive hardware-software ecosystem, making it easier to adopt and transition to their technology. Their software suite supports popular AI frameworks such as TensorFlow and PyTorch, streamlining the migration from existing GPU-based models.

- Cloud and On-Premise Deployment: The company positions its products for flexible deployment, allowing use within cloud environments (through partnerships with CSPs like AWS) and on-premises via setups from partners such as Supermicro.

- Developer Support and Community: Habana provides extensive resources for developers—such as tutorials, GitHub repositories, and community forums—which facilitate easier adoption and optimization of AI models on Gaudi processors, supporting both smooth transitions and innovations in AI model building.

Applications in Industry:

- Healthcare: AI for medical imaging analysis and diagnostic support has been a significant area, where Habana's technology aids in reducing R&D costs and speeding up research.

- Autonomous Vehicles: Gaudi processors support AI models in autonomous vehicle tech through efficient, scalable machine learning solutions.

- Financial Services: AI applications in fintech harness Habana processors to improve operational efficiencies in areas like customer service automation and real-time payment processing.

- Retail: In retail, AI-powered surveillance systems and inventory management are enhanced via Habana's solutions, enabling smart store technologies and checkout-free systems.

Overall, Habana Labs seeks to give a range of industries efficient, scalable, and competitively priced AI solutions, with flexible deployment options built on its Gaudi AI processors and optimized software suite, in response to the growing use of AI in enterprise applications.

Fotographer AI

Fotographer AI gives users full control over image generation, combining ease of use and quality through its middleware, Fuzer.

Fotographer AI is focused on enhancing creativity through AI-driven image generation and editing solutions. Their primary offering is a suite of AI tools designed to streamline the process of creating high-quality images with ease and efficiency, suitable for applications ranging from product photography to animation and design.

Key Focus Area

The company's main focus is providing AI-enhanced tools for visual content creation. These tools serve multiple creative needs, such as background generation, image lighting adjustments, and precision background removal, aimed at professionals in photography, e-commerce, advertising, and creative industries.

Unique Value Proposition & Strategic Advantage

Fotographer AI's unique value proposition is grounded in its generative control technology, offering users the ability to produce images with high precision while preserving the integrity of the subject's features and colors. This technology enables seamless model switching without sacrificing quality, facilitating versatile creative workflows that encompass a wide spectrum of visual styles and complexities. By providing comprehensive tools under one platform, Fotographer AI positions itself as a one-stop solution, potentially lowering operational costs for users by consolidating multiple image processing functions.

Delivering on Value Proposition

Fotographer AI delivers on its value proposition by providing:

- Background Generation & Removal: Tools that automatically and accurately remove backgrounds, allowing for diverse and appealing composite backgrounds. This is facilitated by template options that serve different aesthetic needs, from minimalist to elaborate scenes.

- Relight Feature: A tool that allows users to intuitively adjust image lighting. This feature acknowledges image depth to enable nuanced lighting adjustments that mimic being on set, thus bringing realism to digital compositions.

- Custom Training: The ability to teach the AI using only a handful of images, which can then generate consistent visual content from these trained models. This feature caters particularly to industries requiring character generation and consistent thematic visuals like animation and gaming.

- AI Director: This tool simplifies the creative process by suggesting optimal prompts for image generation, streamlining user engagement with the AI.

- API Access: Offerings that include scalable API solutions for developers seeking to integrate these capabilities into their own platforms, thus expanding the tool's reach to various business sizes and types.

Fotographer AI also supports users with a diverse range of pricing plans, from starter packages suitable for individuals to enterprise solutions offering customizability and enhanced security. This tiered approach aims to cater to both small-scale users and large organizations, potentially offering efficiency gains and cost reductions.

Conclusion

Fotographer AI targets the burgeoning demand for AI-integrated creative processes, positioning itself as a versatile and automated solution for image creation and editing. Although the details provided are promotional, the company emphasizes practical applications across fields, indicating a focus on broad applicability and user empowerment through technology.

Raspberry AI

Raspberry AI helps retail companies design styles that are in high demand.

Raspberry AI Company Overview

Key Focus Area: Raspberry AI centers on utilizing generative AI to streamline and enhance the creative processes in the fashion industry. The company’s platform is geared towards fashion creatives and aims to facilitate the design process from conceptual sketches to photorealistic images, seamless print production, and AI-powered lifestyle photography.

Unique Value Proposition and Strategic Advantage: Raspberry AI’s unique value proposition is its ability to transform traditional labor-intensive design methods into efficient and cost-effective digital workflows. By leveraging AI, the platform significantly reduces the time and resources typically required in the fashion design process. Key features include:

- Design Automation: The capability to convert 2D sketches into photorealistic renderings instantly enables designers to save weeks of iterative prototype development.

- Cost Efficiency: The application reduces sample costs by 30%, achieving significant savings in production processes.

- Customizability: AI models are tailored to encapsulate a brand's unique style, providing companies with customized outputs that align precisely with their design ethos.

Delivery on Their Value Proposition: Raspberry AI delivers its promises through several advanced technological applications:

- Sketch-to-Render Technology: This feature quickly converts sketches into high-fidelity renders, allowing designers to explore extensive creative possibilities without the necessity of physical samples. This not only improves design communication within teams but also accelerates the approval process with stakeholders.

- Seamless Print Generation: The AI swiftly generates custom, royalty-free patterns that seamlessly repeat, providing fashion designers with a broad spectrum of print possibilities without traditional licensing constraints.

- AI-Driven Lifestyle Photography: This functionality involves creating detailed, photorealistic lifestyle images using virtual models. By doing so, the platform enables brands to perform global, location-based photoshoots without physical constraints, supporting sustainability by reducing the carbon footprint associated with frequent travel.

- Enhanced Design Tools: Raspberry AI incorporates tools for precise editing, infinite colorway adjustments, and the generation of technical drawings, all of which simplify complex design tasks, thus enhancing overall productivity for design teams.

- Integrated Security and Privacy Measures: Raspberry AI guarantees full ownership and intellectual property rights to users over their creations, alongside robust privacy protocols ensuring data is neither shared nor used to train their AI models. For enterprise users, private cloud hosting solutions are available to enhance data security further.

By offering a comprehensive suite of tools designed to optimize and secure the design process, Raspberry AI positions itself as a strategic partner for fashion brands looking to innovate and compete in the fast-paced fashion industry.

Axelera AI

Axelera AI specializes in AI acceleration for edge computing applications, with a platform called Metis for handling computer vision inference.

Axelera AI is primarily focused on delivering high-performance AI hardware acceleration solutions designed for edge computing. Their key focus areas include generative AI, computer vision inference, and making AI accessible and efficient across various markets, including security, retail, industrial, and automotive sectors.

Unique Value Proposition and Strategic Advantage:

- AI Acceleration Hardware: Axelera AI's Metis platform, including AI Processing Units (AIPUs) and acceleration cards like PCIe and M.2, claims to deliver unprecedented performance with high throughput and low energy consumption.

- Integrated Hardware and Software Solutions: The company's offer encompasses hardware paired with an easy-to-use software stack, the Voyager SDK, which simplifies the deployment of AI models. This combined offering emphasizes usability, power efficiency, and cost-effectiveness.

- Digital In-Memory Computing Technology: Axelera AI prides itself on using Digital In-Memory Computing (D-IMC) and RISC-V dataflow architectures. This technology minimizes data movement between memory and compute units, supposedly overcoming traditional memory bandwidth constraints and power inefficiencies.

- Partnership and Ecosystem: Collaborations with companies like Arduino aim to enhance the accessibility of AI at the edge. By leveraging Arduino’s open-source community and Axelera’s hardware acceleration, the partnership aims to democratize AI innovations for a broader audience.

Delivery on Value Proposition:

- Product Offerings: The Metis Compute Board and Accelerator Cards provide comprehensive solutions for AI inference at the edge. These products aim to bridge the gap between cutting-edge AI technology and practical implementation by offering ready-to-deploy systems that require minimal additional development from users.

- Cost and Power Efficiency: Axelera’s technology is touted as being significantly more energy-efficient and cost-effective than traditional AI solutions like GPUs. This is attributed to their specialized architecture and to quantization techniques that increase throughput without compromising accuracy.

- Flexible and Scalable Platform: Through the Metis evaluation systems, Axelera AI caters to a wide array of applications, including video analytics and quality inspection, and can handle multiple high-resolution video streams simultaneously. The scalability of the platform allows it to integrate with existing systems, enhancing its practicality and future-proofing.

- Strategic Geographic and Market Expansion: With significant investment backing, Axelera AI plans to broaden its market reach into North America, Europe, and the Middle East. This expansion strategy aims to meet the growing demand for efficient AI solutions and to support computing needs across new application domains, including automotive and digital health.

- Client Engagement and Community Building: Through events, partnerships, and an online store, Axelera AI encourages engagement, offering personalized demonstrations of their technology to drive client and community uptake.

Axelera AI is positioning itself as a key player in the edge AI space, focusing on hardware that claims to deliver high efficiency and performance while addressing cost and power consumption challenges. Their strategic partnerships and planned expansions underscore a commitment to evolving with market demands and enhancing AI accessibility globally.

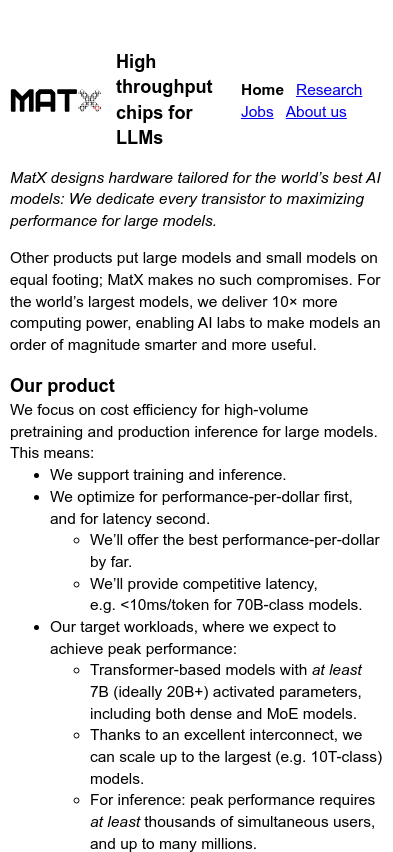

MatX

MatX is an AI chip startup that designs chips that support large language models.

Executive Summary of MatX

1. Key Focus Area: MatX develops high-performance hardware designed specifically for large-scale artificial intelligence models, with an emphasis on maximizing computing power for the largest models. Their chips target large language models, with the goal that every transistor contributes as much as possible to the performance of these models. This positions them in the niche market of hardware tailored for high-throughput AI applications.

2. Unique Value Proposition and Strategic Advantage: MatX’s unique value proposition lies in its dedicated design approach that differentiates between the needs of large and small models, without compromise. Their strategic advantage includes:

- Delivering 10 times more computing power for large AI models, enabling advancements in AI model intelligence and utility.

- Offering superior performance-per-dollar, prioritizing cost efficiency for high-volume model pretraining and inference, which is critical for their target workloads.

- Providing hardware that allows leading AI models to become available 3-5 years sooner than they typically would, thus accelerating AI innovation and accessibility.

3. Delivery on the Value Proposition: To deliver on this value proposition, MatX has implemented several strategies:

- Hardware Optimization: By focusing on key technical capabilities such as low-level control over hardware, efficient interconnects, and the ability to scale, MatX targets optimal performance for demanding AI workloads, such as transformer-based models with over 7 billion activated parameters.

- Support for Scale: Their technology supports clusters spanning hundreds of thousands of chips, crucial for both training and inference of large models.

- Cost Efficiency: Their approach to optimizing training and inference costs is reflected in their focus on performance-per-dollar and effective latency management. They optimize not only for the floating-point operations needed for model training but also for the memory and computational demands of inference, introducing new attention models and architecture strategies.

- Tools and Codebase Development: The introduction of tools like seqax, a simple and efficient codebase for LLM research, demonstrates their commitment to facilitating experimentation and innovation in AI, supporting scalability on up to 100 GPUs or TPUs.

MatX emphasizes balancing training and inference costs in AI model development, considering how architectural adjustments can improve efficiency and reduce costs. By addressing both the computational and memory demands during inference, they aim to provide solutions that are both economically feasible and technologically advanced. They further augment their offerings with strategic partnership and investment from specialists, solidifying their position in the market.

Conclusion: MatX is capitalizing on the need for specialized hardware that caters to large-scale AI applications, offering solutions that address both immediate computational needs and future expansions. By focusing on cost efficiency and performance, along with comprehensive support for the development and deployment of large AI models, they empower organizations ranging from startups to large research labs to advance in AI capabilities.

Rebellions.ai

Rebellions.ai builds AI accelerators by bridging the gap between underlying silicon architectures and deep learning algorithms.

Rebellions, a South Korean semiconductor company, has centered its business on the development and production of energy-efficient AI inference chips. Their mission is to enable efficient and scalable generative AI solutions, focusing on the demands of contemporary and future AI workloads.

Key Focus Area: Rebellions specializes in crafting advanced AI chips, particularly for powering AI inference tasks. This involves creating semiconductors utilized primarily in data centers and cloud services, accommodating demanding AI applications, such as large language models and high-frequency trading.

Unique Value Proposition and Strategic Advantage: Rebellions positions itself as a strategic player in the field of AI semiconductors by:

- Energy Efficiency: The chips developed by Rebellions emphasize maximum core utilization for high energy efficiency, especially for intensive AI workloads. Their architecture is designed to ensure no core is idle.

- Scalability: Their products are designed to accommodate scalability from single chips to full data racks, facilitating growth with changing business needs. This modular and scalable design makes their technology versatile across various applications and industries.

- Local Ecosystem and Strategic Partnerships: A key advantage is the local integration within the South Korean technological ecosystem, bolstered by partnerships with tech giants like Samsung Electronics. This positions them uniquely for significant domestic semiconductor capabilities while expanding globally.

Delivering on their Value Proposition: Rebellions advances its strategic goals and delivers on its value propositions through:

- Product Development: The company offers products like the REBEL HBM3e chip, designed for hyperscale workloads with high compute density, and the ATOM™ series, focused on low-latency, efficient AI inference. These products provide high-speed, energy-efficient processing suitable for diverse AI models.

- Technology and Innovation: Working with Samsung, Rebellions uses cutting-edge fabrication processes, notably 4nm process and high-bandwidth memory technologies, to enhance product performance and efficiency.

- Strategic Mergers and Investments: Rebellions’ merger with SAPEON Korea and the significant investments secured across its funding rounds indicate strong backing and trust in its innovative capacity and market potential. Such capital allows further global expansion and talent acquisition, enhancing their development capabilities.

- Engagement with the AI Ecosystem: Their software stack, including the RBLN SDK, supports a wide range of ML models and features, aiming to ease deployment and integration into AI services so that products meet diverse AI infrastructure needs.

In summary, Rebellions aims to offer high-energy-efficiency AI chips that are scalable and suitable for a breadth of applications. While utilizing South Korea's semiconductor manufacturing capabilities and forging critical international partnerships, it strives to maintain its competitive edge in the rapidly evolving AI market.

Kneron

Kneron develops application-specific integrated circuits and software that offer artificial intelligence-based tools.

Kneron specializes in developing on-device edge artificial intelligence (AI) solutions. The company's primary focus is on creating hardware and software systems that enable AI processing directly on devices instead of relying on cloud computing. This approach facilitates faster processing times, enhances data privacy, and lowers costs for integration into various consumer and industrial applications.

Unique Value Proposition and Strategic Advantage:

- On-Device AI Processing: Kneron's core advantage lies in its ability to provide AI solutions that process data directly on the device. This reduces reliance on cloud resources, thus addressing privacy concerns since data remains on the device rather than being transmitted over the internet.

- Reconfigurable Technology: Kneron’s patented Neural Processing Unit (NPU) technology allows real-time adaptability for different recognition tasks, supporting audio, 2D, and 3D image recognition needs while being compatible with major AI frameworks.

- Energy Efficiency: Their AI System on Chip (SoC) solutions offer a balance between performance and power efficiency, which is particularly important for battery-powered and portable devices.

- Cost-Effectiveness: By integrating AI computation on devices, Kneron reduces the overall cost structure for device makers, lowering time-to-market barriers and providing customizable, low-cost solutions.

Delivery on Value Proposition:

- AI System on Chip (SoC): Kneron’s AI SoCs are integral to their delivery strategy, combining neural network-powered processing with high energy efficiency. These chips serve various applications, including smart homes, vehicles, and security devices.

- Edge AI Models: Kneron develops lightweight AI models optimized for power and performance. These models are specifically designed for edge applications where space and power are limiting factors.

- KNEO Platform: Their KNEO AI APP STORE and KNEO Stem modules empower developers to create and deploy AI applications with ease, heralding a new era of AI applications akin to mobile apps.

- Custom Solutions: Kneron tailors integrated hardware and software solutions that enable partners to expedite on-device edge AI deployment and optimize for specific use cases such as smart vehicles, security, and IoT apps.

- Education Initiatives: Kneron is committed to fostering AI literacy through partnerships with educational institutions, providing learning resources and a platform to engage students and developers in AI innovation.

Through these efforts, Kneron aims to make edge AI accessible and widespread, positioning itself as a key player in revolutionizing how AI applications are developed and deployed across different industries.

Axelera

Axelera is working to develop AI acceleration cards and systems for use cases like security, retail and robotics.

Key Focus Area

Axelera AI is dedicated to advancing AI technologies with a focus on edge computing solutions. Their primary industry application is in accelerating inferencing for computer vision tasks within edge devices. They aim to enable efficient, cost-effective, and high-performance AI solutions specifically tailored for applications in the security, retail, and industrial sectors, among others. This approach allows users to process data insights rapidly and efficiently without the constraints typically associated with traditional cloud-based AI systems.

Unique Value Proposition and Strategic Advantage

Axelera AI's distinctive advantage lies in their development of a comprehensive hardware and software ecosystem, specifically designed for edge AI applications. The heart of their offering is the Metis AI Processing Unit (AIPU) which utilizes proprietary Digital In-memory Computing (D-IMC) technology in conjunction with RISC-V architecture. This combination promises to deliver:

- High Performance and Efficiency: The Metis AIPU can achieve up to 214 TOPS, offering significant computational power while maintaining energy efficiency.

- Cost Effectiveness: By utilizing D-IMC and standard CMOS technologies, Axelera's solutions aim to be more cost-effective than traditional GPU-based alternatives.

- Scalability: Their hardware can be scaled and integrated into existing systems using familiar interfaces like PCIe and M.2, which facilitates ease of deployment and future-proofing for users.

Delivering on Value Proposition

Axelera AI fulfills its pledge to provide cutting-edge AI acceleration through several initiatives:

- Technological Innovation: Their Metis AIPU and the accompanying Voyager SDK offer an integrated hardware-software solution that simplifies deploying AI models on edge devices. The D-IMC technology ensures high computational efficiency while reducing power consumption.

- Ecosystem Partnerships: Collaborations with established platforms such as Arduino expand the accessibility and applicability of their AI solutions. These partnerships aim to integrate high-performance AI capabilities into a broader range of devices and applications.

- Product Offerings: Axelera provides hardware options, including evaluation kits and AI acceleration cards, that simplify the integration of AI into existing infrastructure, thus reducing both time-to-market and operational costs for end users.

- Application Versatility: Their solutions are suitable for multiple applications such as multi-channel video analytics, safety monitoring, and real-time operational intelligence, enabling a diverse range of industries to leverage AI efficiently.

- Sustainability Focus: Axelera AI promotes running high-performance AI applications on-device, reducing dependency on power-intensive cloud solutions and contributing to lower carbon emissions.

In sum, Axelera AI strategically positions itself to accelerate AI innovation, empowering industries to deploy efficient edge AI applications with reduced cost and energy consumption while maintaining significant computational capability.

EdgeQ

EdgeQ intends to fuse AI compute and 5G within a single chip. The company is pioneering converged connectivity and AI that is fully software-customizable and programmable.

1) What is this company's key focus area?

EdgeQ focuses on advancing wireless infrastructure, emphasizing the integration of 4G, 5G, and Artificial Intelligence (AI) within a robust, scalable, and economically efficient framework. The company positions itself at the intersection of connectivity and compute technology, providing solutions that fuse telecommunications with artificial intelligence enhancements.

2) What is their unique value proposition and strategic advantage?

- Base Station-on-a-Chip: EdgeQ is credited with introducing the industry's first Base Station-on-a-Chip, combining 4G, 5G, and AI capabilities in a single, compact piece of silicon that handles functionality traditionally managed by multiple devices. This offering promises cost and power savings by using just one chip for infrastructure demands.

- Open, Software-Defined Architecture: Their approach is software-defined and fully programmable, allowing operators to configure and upgrade networks through software and avoid the traditional costs associated with hardware changes. This provides flexibility in deployment and reduces operational burdens.

- O-RAN (Open Radio Access Network) Compatibility: By aligning with the principles of O-RAN, EdgeQ supports standardized interfaces, which facilitate interoperability among different vendors, further reducing the complexity and costs associated with deploying and managing wireless network infrastructure.

3) How do they deliver on their value proposition?

- Integrated Comprehensive Solution: EdgeQ's Base Station-on-a-Chip offers an all-in-one solution that integrates baseband processing, network processing units (NPUs), CPUs, and AI capabilities. This highly consolidated approach optimizes network deployment by providing scalability and efficiency.

- Customization and Upgradability: EdgeQ utilizes a software-first approach that allows for considerable customization and over-the-air upgradability. Thus, enterprises can dynamically adjust spectrum usage and scale operations as needed without the need for physical infrastructure changes.

- Mission-Critical Applications: By supporting private 5G solutions and power over ethernet setups, EdgeQ caters to mission-critical use cases requiring Ultra-Reliable Low Latency Communication (URLLC), making it viable for industries like industrial automation and real-time data processing.

- Partnerships and Ecosystem Integration: Collaborations with industry leaders such as Vodafone emphasize EdgeQ's strategy to leverage its platform within existing and emerging infrastructure ecosystems, thereby validating their technologies' application and scalability in real-world deployments.

In summary, EdgeQ leverages its innovative Base Station-on-a-Chip technology, combined with a software-defined and open architecture, to deliver comprehensive, customizable, and cost-effective solutions for the evolving needs of wireless infrastructure. Their approach facilitates flexibility, adaptability, and enhanced networking capabilities for operators while aligning with modern telecommunications paradigms like O-RAN.

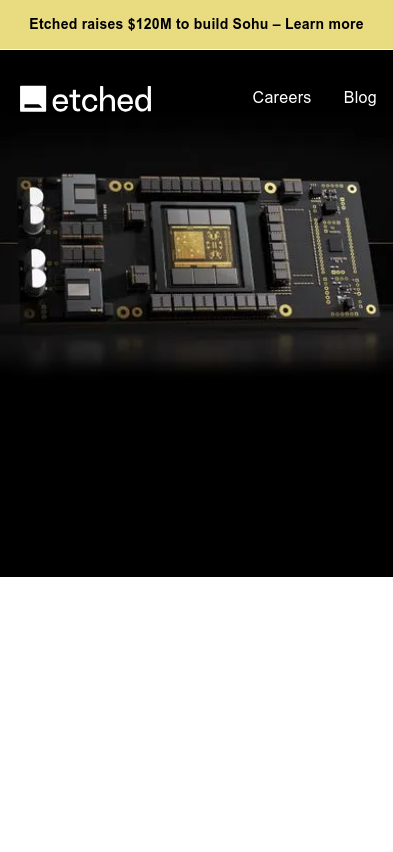

Etched.ai

Etched.ai is an AI chip startup that develops Sohu, a chip designed specifically for running transformer models.

Overview

Etched positions itself in the AI hardware market by focusing on application-specific integrated circuits (ASICs) tailored for transformer models, the architecture behind most advanced AI systems today, including ChatGPT. Its flagship product, the Sohu chip, seeks to provide a faster and more cost-effective alternative to traditional GPUs.

Key Focus Area

- Specialization in AI Hardware: Etched develops application-specific integrated circuits (ASICs) specialized for transformers. These chips are crafted to outperform general-purpose GPUs in running transformer AI models.

Unique Value Proposition and Strategic Advantage

- Increased Efficiency and Performance: By embedding transformer architecture directly into the hardware, Sohu promises significant performance improvements in terms of speed and cost when running transformer-based AI models. This specialization allows for a marked increase in throughput: Etched claims over 500,000 tokens processed per second, a figure it positions against NVIDIA’s next-generation GPUs.

- Focused Design for Specific AI Models: Sohu is designed to exclusively handle transformer inference, a common task across modern AI applications like ChatGPT and image models like Stable Diffusion. This dedication to a specific model type allows for removing superfluous control-flow logic, enhancing the chip's utility for its intended applications.

- Predictable Scalability: By concentrating specifically on scaling transformer architecture, the Sohu ASIC aims to meet and even anticipate the scaling demands of contemporary AI tasks without the need for comprehensive GPU programmability, resulting in better resource allocation for known workloads.

Delivery on Value Proposition

- Specialized Hardware Optimization: Sohu’s design reduces reliance on generalized GPUs by specializing solely in transformer operations, enabling a higher density of computational power tailored directly to these tasks.

- Open Source Software Support: Etched offers an open-source software stack to accommodate customized transformer layers, facilitating organizations’ specific requirements without the typical constraints associated with proprietary systems.

- Real-World Application Testing: Through partnerships, like the one with Decart in developing the interactive AI model Oasis for generating video games, Etched highlights Sohu's capability to handle complex, compute-intense applications beyond theoretical constructs, augmenting the practicality of its claims.

Conclusion

Etched endeavors to carve out a niche for itself in the AI hardware landscape via Sohu, emphasizing transformative acceleration and cost efficiencies over general-purpose GPU solutions. This narrow focus on application-specific optimization, combined with an open-source approach to software, attempts to provide a compelling argument for its strategic advantage in handling burgeoning AI workloads. Nonetheless, prospective adopters should critically assess how the restricted utility beyond transformers fits their broader needs.

Liquid AI

Liquid AI focuses on developing advanced, general-purpose AI systems that align with human values and trustworthiness.

Liquid AI, an MIT spin-off based in Boston, primarily focuses on developing general-purpose AI systems that are capable, efficient, and scalable. Their efforts are centered around creating AI foundation models that leverage innovative architectures and theoretical foundations in machine learning, signal processing, and numerical linear algebra.

Key Focus Area: Liquid AI's main focus is the development of general-purpose AI systems through its Liquid Foundation Models (LFMs). These models are structured to handle various forms of sequential data across numerous industries, including financial services, biotechnology, and consumer electronics. Their LFMs, notably the LFM-7B, are designed to provide comprehensive AI functionalities that are both energy and memory efficient.

Unique Value Proposition and Strategic Advantage:

- Innovation in AI Architecture: Liquid AI emphasizes a shift from traditional transformer-based models, such as GPTs, to their own non-transformer-based architectures. This pivot reportedly allows their models to be highly efficient, offering superior performance with reduced computational demands.

- Multilingual and Multi-modal Capabilities: Liquid AI asserts that its LFMs excel in multiple languages, including English, Arabic, and Japanese, and are adaptable to various industry-specific applications.

- Low Memory Footprint: The architecture of LFMs ensures a minimal memory footprint, which is posited to lead to cost-efficiency in deployment and tuning processes.

- Strategic Collaborations and Funding: Liquid AI has garnered a significant $250M Series A funding primarily led by AMD Ventures, alongside collaborations with influential entities like Capgemini and ITOCHU, to scale their products and penetrate various markets effectively.

How They Deliver on Their Value Proposition:

- Model Deployment and Customization: Liquid AI offers models that can be finely tuned and deployed directly on-premises or on edge devices. Through their Liquid Engine, they provide high-performance AI solutions that are claimed to adapt to enterprise-specific needs with reduced latency and enhanced privacy.

- Comprehensive Model Stacks: The LFMs come with integrated inference and customization stacks that allow enterprises to adapt the technology for private, secure, and specific use cases.

- AI Integrated Platforms: Their products are available for trial and integration through several accessible platforms, such as Amazon Bedrock and the Liquid Playground, aiming to make LFMs versatile and easy to access for development teams.

- Rapport with Strategic Partners: They actively work on creating AI solutions in collaboration with partners, like their joint projects with Capgemini to develop new capabilities in AI domains such as edge solutions and enterprise AI transformations.

Liquid AI seems to position itself as a forerunner in the transition to advanced, efficient AI models tailored for broad applications, purporting to reduce carbon footprints associated with traditional AI infrastructure and facilitate easier deployment and customization for enterprises. Nonetheless, it's important to recognize these claims as part of a marketing narrative.

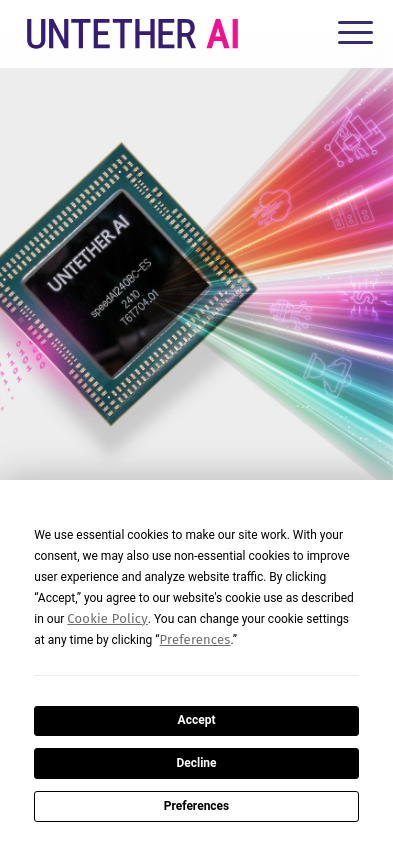

Untether AI

Untether AI focuses on improving AI inference efficiency and reducing energy consumption, developing the SpeedAI accelerator cards for enhanced performance in AI applications.

Key Focus Area

Untether AI is concentrated on enhancing AI inference performance for various applications through the creation of advanced hardware solutions. Their primary aim is to facilitate the deployment of artificial intelligence (AI) from the cloud to the edge across multiple sectors, such as AgTech, automotive, government, and financial services. They focus on accelerating AI inference tasks, promoting performance, energy efficiency, and cost-effectiveness.

Unique Value Proposition and Strategic Advantage

Untether AI’s unique value proposition lies in its novel at-memory compute architecture. This architecture positions the processing element directly next to memory cells, thereby reducing the power consumption associated with data movement, which traditionally dominates AI workloads. This setup diminishes the need for larger data transfers, leading to significant operational efficiency and exceptionally high compute density, making it particularly appealing for high-performance, power-sensitive AI applications. Their products offer energy-efficient AI operations, which claim to outperform traditional systems by providing higher throughput and lower power usage.

Delivery on the Value Proposition

To achieve their value proposition, Untether AI delivers a suite of products comprising integrated circuits (ICs), accelerator cards, and software development kits:

- AI Inference Accelerator ICs: These include the runAI200 and the speedAI240. The ICs exploit an at-memory architecture for optimal compute density and low power consumption. They support a variety of AI workloads, from vision-based networks to transformer networks, catering to needs across automotive, finance, agriculture, and government sectors.

- AI Accelerator Cards: Untether’s cards, such as the tsunAImi tsn200 and tsunAImi tsn800, are designed for high-demand inference tasks, delivering substantial performance in a power-efficient manner. They incorporate features suitable for both server-grade and edge applications, making AI deployment more broadly feasible.

- Software Development Kit (imAIgine SDK): This SDK enables seamless model transition from popular frameworks like TensorFlow and PyTorch to Untether AI’s hardware. It offers a Model Garden and custom development flows that support the rapid deployment of AI solutions, emphasizing ease of integration and operational flexibility.

- Target Markets and Applications: Untether AI focuses on sectors like agricultural technology (AgTech), automotive, financial services, and government applications, where AI can deliver substantial improvements in operational efficiency, safety, and sustainability. For example, in AgTech, AI assists in precision farming and crop monitoring, while in automotive, it enhances advanced driver-assistance systems (ADAS).

Overall, Untether AI emphasizes an innovative approach to overcoming AI deployment challenges, delivering higher performance and efficiency through a unique hardware design that benefits from reduced power consumption and increased density of compute resources. Their strategic partnerships and compliance with industry standards further support their market positioning in enabling AI across diverse operational environments.

Abacus.AI

Abacus.AI enables businesses to implement AI without needing expert developers by offering pre-trained models for tasks like customer service and forecasting.

Company's Key Focus Area: Abacus.AI is primarily focused on providing AI-driven solutions tailored for both individual professionals and large enterprises. Their main goal is to automate and enhance business processes through the use of AI technology. This includes a broad range of applications, such as predictive modeling, personalization, anomaly detection, and AI-based decision-making tools. They offer platforms and tools to build AI agents and chatbots, optimize resources through discrete optimization, and utilize vision AI for modeling tasks.

Unique Value Proposition and Strategic Advantage: Abacus.AI positions itself as an AI super-assistant that leverages generative AI technology to automate various business processes. Their strategic edge lies in their state-of-the-art AI capabilities, including structured machine learning, vision AI, and personalized solutions, along with a commitment to open-source generative AI models. They claim that their AI systems can enhance productivity and efficiency by automating complex tasks and reducing human intervention.

Delivery on Their Value Proposition: To deliver on its value proposition, Abacus.AI employs:

- AI Super Assistants: Tools like ChatLLM and CodeLLM are designed to integrate AI capabilities across platforms, providing services like web search, image generation, and code editing.

- Comprehensive AI Platform: For larger organizations, they offer a platform capable of building enterprise-scale AI systems, using AI to create and manage other AI agents and processes. This platform aims to automate tasks such as fraud detection, contract analysis, and personalized marketing.

- Structured ML and Predictive Modeling: Abacus.AI provides tools to create machine learning models tailored to specific data inputs, ensuring accurate business predictions and process optimizations.

- Vision AI and Optimization: These services offer advanced solutions for image analysis and optimizing business processes under given constraints, aimed at reducing costs and increasing efficiency.

- Integration and Customization: The company offers integration with existing data systems, allowing for customization and personalized setups that fit specific business needs and enable contextual AI interactions.

- Consultation and Support: They provide consultations to help enterprises tailor the AI solutions to their specific requirements and offer support throughout the implementation process.

Overall, their approach focuses on using cutting-edge AI models and deep learning techniques to build custom solutions that improve business process efficiency and decision-making.

NVIDIA

NVIDIA is a leading producer of AI hardware, renowned for its GPUs. It plays a pivotal role in the AI chip market as the leader in both revenue and volume.

Key Focus Area: NVIDIA's primary focus is on accelerated computing technology designed to power artificial intelligence (AI) and other computationally intensive tasks across various sectors. They cater to industries such as healthcare, automotive, gaming, manufacturing, and more, capitalizing on their hardware and software capabilities to optimize AI and data processing workloads.

Unique Value Proposition and Strategic Advantage: NVIDIA distinguishes itself by offering cutting-edge hardware like GPUs and AI supercomputers, and supporting software platforms that enable efficient AI computation and application. Their strategic advantage lies in the development of tailor-made solutions such as NVIDIA RTX, the Omniverse platform for 3D workflows and collaborative design, and cloud-based AI services. NVIDIA’s partnerships with key tech giants like Microsoft and Google for AI and data processing enrich their service offering, enabling comprehensive solutions for enterprise AI needs.

How They Deliver on Their Value Proposition:

- Product Range: The company offers a wide spectrum of products, including GeForce graphics cards for gaming, the DRIVE platform for autonomous vehicles, and the DGX platform for AI training. Their offerings extend to software, such as the AI Enterprise suite and specialized AI tools for various industries.

- Platform Initiatives: NVIDIA advances AI applications through initiatives like Project DIGITS, allowing local and cloud-based AI computational tasks, and Cosmos, which accelerates AI development for physical systems like robotics and autonomous vehicles. Their efforts in the metaverse, through the Omniverse platform, demonstrate their commitment to virtual simulation and 3D development.

- Innovative Solutions: NVIDIA emphasizes high-performance computing (HPC) with solutions like CUDA-based parallel computing and deep learning frameworks that enhance scientific research and design processes. Their focus on delivering AI tools for sectors like healthcare and entertainment showcases their ability to impact diverse fields.

- Collaborations and Partnerships: They strategically align with industry leaders such as Microsoft, Google, and Oracle to integrate their technologies into broader enterprise ecosystems. These collaborations enhance NVIDIA's reach and influence in deploying scalable AI solutions.

- Training and Support: NVIDIA supports the adoption of its technologies through extensive resources, including technical training, support for developers, and comprehensive solution catalogs that facilitate easier implementation of their platforms and products.

These strategic initiatives enable NVIDIA to maintain its position in advancing AI capabilities and catering to an increasingly digital-first world, impacting industries by solving complex computational problems and innovating upon AI-driven solutions.

AMD

AMD is a key player in the AI hardware market, ranking second by market valuation and offering competitive chips like the MI350.

Key Focus Area: AMD primarily focuses on designing and manufacturing semiconductor products. Their key focus is on processors for various computing needs, including high-performance CPUs and GPUs for both personal and business use. They target multiple sectors such as gaming, data centers, personal computers, and professional workstations, emphasizing AI integrations into their product offerings.

Unique Value Proposition and Strategic Advantage: AMD positions itself as a provider of high-performance computing solutions that seamlessly integrate AI capabilities. The company markets its Ryzen™ processors as the first x86 processors with dedicated AI engines, claiming to bring advanced AI functionalities directly to PCs. This integration allows for local AI processing, enhancing privacy and security, which AMD considers a strategic advantage over cloud-dependent AI solutions. AMD emphasizes leadership in power efficiency and processing speed, presenting AMD Ryzen™ and EPYC™ processors as optimized for enterprise-grade performance with security and manageability features built-in.

Delivery on Value Proposition: AMD delivers on its value proposition by incorporating AI engines and adaptive SOCs into its processors, enabling users to manage intensive computational workloads and AI-enhanced tasks locally. They provide a comprehensive suite of processors that are optimized for specific industries, from gaming and high-performance computing to AI-driven business solutions and media production. AMD also collaborates with major OEMs and partners to ensure that their processors are widely available in consumer and enterprise products, promoting seamless integration. Additionally, they offer an extensive software ecosystem, including ROCm™ and professional management tools, to enhance the capabilities and integration of their hardware solutions. Product stability, long-term availability, and enhanced security are actively highlighted as essential features that meet business and industrial demands. Through strategic partnerships and a commitment to innovation in AI and processing technology, AMD aims to support and advance enterprise workflows comprehensively.

Intel

Intel is a market leader in CPUs, expanding its influence in the AI sector with products like the Gaudi 3 chip, aiming to catch up in the GPU arena.

Intel's Strategic Focus and Deliverables

1) Key Focus Area:

Intel is highly focused on Artificial Intelligence (AI) innovation across various industries and technological platforms. Their efforts span from traditional areas like processors and computing hardware to cutting-edge solutions that integrate AI across data centers, cloud computing, and enterprise systems.

2) Unique Value Proposition and Strategic Advantage:

- Open Platform for Enterprise (OPEA): Intel provides a comprehensive AI ecosystem that includes silicon, software, and tools, allowing enterprises to harness AI's potential across devices from PC to edge and data centers. This strategy is aimed at various industries, including financial services, manufacturing, healthcare, and retail.

- AI-Powered Performance: Their newest product offerings, such as the Intel® vPro® PCs and Core™ Ultra processors, are tailored to enhance productivity with integrated AI capabilities, improving everything from security to computing efficiency.

- Scalability and Edge Solutions: Intel's platforms, such as the Tiber™ AI Cloud, are designed to streamline the deployment of AI solutions at an enterprise scale and across edge environments.

- Enterprise Solutions: Intel underscores the capability of their systems to glean actionable insights from data, driving automation and enhancing decision-making processes, which serves as a significant business advantage to clients.

3) Delivering on the Value Proposition:

- Comprehensive Product Portfolio: Intel offers a wide range of products including processors (Intel® Core™ Ultra and Intel® Xeon®), graphics solutions with their Arc™ series, and AI accelerators, which support AI workload demands.

- Tools and Partnerships: With resources like the OpenVINO™ toolkit for AI model optimization and the oneAPI Toolkits for unified programming, Intel caters to developers seeking efficiency and innovation. They also leverage extensive partnerships for joint solutions and industry standards.

- Enhanced Security and Manageability: Intel platforms focus on security with hardware-level protections and remote management capabilities, critical for enterprise environments.

- Empowering the Workforce: Solutions like Intel vPro® are aimed at fortifying businesses with robust security tools and optimizing device management, thus allowing tech teams to focus more on innovation than maintenance.

- Development Resources: Through its Developer Zone and resources, Intel promotes easy access to its software tools and solutions that support innovation, scalability, and performance optimization. This aids developers in crafting solutions easily across various technology stacks.

- Evidence of Impact: Intel documents case studies, such as those with financial and agriculture sectors, demonstrating their AI solutions achieving significant efficiency improvements and cost reductions.

Final Thoughts:

Intel continues to strategically position itself as a provider of diverse technological platforms and solutions, placing special emphasis on AI innovations. By emphasizing scalable and secure solutions supported by a robust hardware and software ecosystem, Intel aims to not only meet but guide the evolving demands of enterprise-level computing and AI applications.

RaiderChip

RaiderChip is developing a hardware IP core to accelerate generative AI inference on FPGA platforms, emphasizing small to large language models.

Company Focus Area

RaiderChip is a hardware design company specializing in developing neural processing units (NPUs) for generative artificial intelligence (AI). The company's primary focus is accelerating large language models (LLMs) on low-cost field-programmable gate arrays (FPGAs). These accelerators aim to improve AI processing efficiency and run generative AI models entirely locally, without requiring cloud or subscription services. RaiderChip targets the growing edge AI market with embedded solutions capable of running without an internet connection.

Unique Value Proposition and Strategic Advantage

RaiderChip's unique value proposition lies in offering clients a cost-effective, local AI acceleration solution that prioritizes privacy and efficiency. Their strategic advantage comes from leveraging decades of experience in low-level hardware design to deliver NPUs that maximize memory bandwidth and computational performance, essential for running complex generative AI models efficiently. RaiderChip offers versatile and target-agnostic solutions that work seamlessly with FPGAs and ASIC devices from various suppliers such as Intel-Altera and AMD-Xilinx.

Key differentiators include:

- Fully hardware-based generative AI acceleration, ensuring deterministic performance and energy efficiency.

- Capability to run models offline to ensure data privacy and independent operations, ideal for applications in sensitive areas such as defense, healthcare, and consumer electronics.

- Support for rapid integration of new LLMs and customer-specific fine-tuned AI models without hardware modifications.

Delivering on Their Value Proposition

To drive its mission forward, RaiderChip focuses on several key strategies:

- Advanced Technology and Design: Their NPUs are specifically optimized for the Transformers architecture, which is foundational for the majority of LLMs. This allows them to process models without the need for additional CPUs or Internet connections.

- FPGA-based Approach: RaiderChip uses the reprogrammable nature of FPGAs, making their deployed systems adaptable to emerging AI models and updates without significant hardware changes.

- Quantization Support: By offering models with full floating-point precision and quantized variants, RaiderChip caters to diverse computational needs, providing flexibility and efficiency, especially in resource-constrained environments.

- Local and Interactive Evaluations: RaiderChip provides interactive demos that allow potential customers to test and evaluate the capabilities of their generative AI solutions, emphasizing a hands-on approach.

- Frequent Model Support Expansion: Aligning with market trends, they consistently add support for new models like Meta’s latest Llama releases and Falcon from TII of Abu Dhabi, enabling versatile AI applications across different languages and regions.
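To make the quantization trade-off mentioned above more concrete, here is a minimal, generic sketch of symmetric 8-bit weight quantization in Python. It is an illustration only, not RaiderChip's implementation: the function names, the per-tensor scaling scheme, and the sample weights are all assumptions chosen for the example.

```python
# Generic illustration of symmetric int8 quantization (hypothetical helper
# names; not taken from any RaiderChip product or SDK).

def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the quantized integers."""
    return [v * scale for v in quantized]


# Made-up sample weights, for illustration only.
weights = [0.12, -0.98, 0.45, 0.0, 0.77]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Worst-case rounding error introduced by quantization.
max_error = max(abs(a - b) for a, b in zip(weights, restored))

# Storage cost: 4 bytes per 32-bit float versus 1 byte per int8 value.
fp32_bytes = len(weights) * 4
int8_bytes = len(weights) * 1

print(f"quantized values: {q}")
print(f"max rounding error: {max_error:.6f}")
print(f"storage: {fp32_bytes} bytes (fp32) vs {int8_bytes} bytes (int8)")
```

The quantized variant needs a quarter of the storage of 32-bit floats, at the cost of a small rounding error bounded by half the scale. That is the kind of trade-off that matters in resource-constrained environments.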

RaiderChip’s investments in research and development are supported by their recent seed round of one million Euros, further accelerating their growth in a field that continuously evolves with rapid technological advancements. By focusing on local AI acceleration for both performance and privacy, RaiderChip positions itself as a pioneer in generative AI deployment, particularly for applications requiring significant computation near the source of data generation or decision-making.